
...

Usability guru Jared Spool has written extensively about the scent of information – how users hunt through a site, click by click, to find the content they’re looking for. Tree testing helps us deliver a strong scent by improving:

...

Anyone who’s watched a spy film knows that there are always false scents and red herrings to lead the hero astray. Anyone who’s run a few tree tests has probably seen the same thing: headings that lure participants to the wrong answer. We call these “evil attractors”, because they lead participants down the wrong path not just for one task, but for several different tasks.

The false scent

One of our favorite examples of an “evil attractor” comes from a tree test we ran for consumer.org.nz, a New Zealand consumer-review website much like Consumer Reports in the USA. Their site lists a wide range of consumer products in a tree several levels deep, and they wanted to try out a few ideas to make things easier to find as the site grew.

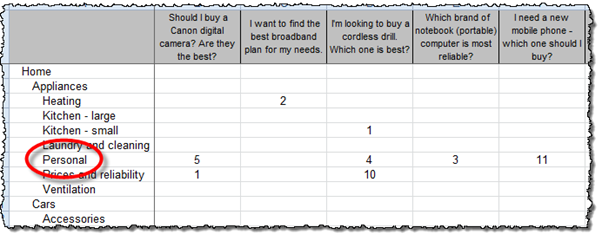

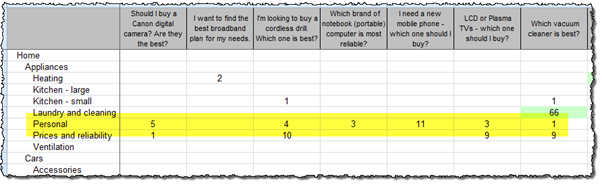

We ran the tests and got some useful answers, but we also noticed that there was one particular subheading (Home > Appliances > Personal) that got clicks from participants looking for very different things – mobile phones, vacuum cleaners, home-theater systems, and so on:

The website intended this “personal appliance” category to be for products like electric shavers and curling irons, but apparently “Personal” meant many things to our participants, because they also went there for “personal” items like mobiles and cordless drills that actually lived somewhere else.

This is the false scent, the heading that “attracts” clicks when it shouldn’t, leading participants astray. Hence this definition:

| Warning |
|---|
| Evil attractor: A heading that draws unwanted traffic in several unrelated tasks. |

...

What makes an attractor “evil”?

...

The other “evil” part of these attractors is the way they hide in the shadows. Most of the time, they don’t get the lion’s share of traffic for a given task; instead, they’ll siphon off 5-10% of the responses, luring away a fraction of users who might otherwise have found the right answer.

Spotting an evil attractor

...

We use the same results view that we saw in the “Analyzing by user group” section above: a matrix that shows the tree down the left side and the tasks across the top. Here’s part of the view from the consumer.org.nz study:

Normally, when we look at this view, we’re looking down a column for big hits and misses for a specific task. To look for evil attractors, however, we look for patterns across rows. In other words, we read horizontally, not vertically.

...

So yes, in this case, we seem to have caught an evil attractor red-handed. “Personal” is clearly a heading that’s getting steady traffic when it shouldn’t.
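To make the row-by-row reading concrete, here is a minimal Python sketch of the same check. It is an illustration, not a feature of any particular tree-testing tool. It assumes we have exported, for each task, how many participants clicked each heading and how many attempted the task, plus the headings that count as correct for that task. Both thresholds are assumptions: `min_share=0.05` echoes the 5-10% siphoning described earlier, and `min_tasks=3` is one arbitrary reading of “several unrelated tasks”. The function name, every heading other than Appliances > Personal, and all of the counts are hypothetical.

```python
from collections import defaultdict


def find_evil_attractors(visits, participants, correct, min_share=0.05, min_tasks=3):
    """Flag headings that draw unwanted clicks across several tasks.

    visits:       {task: {heading: participants who clicked that heading}}
    participants: {task: participants who attempted that task}
    correct:      {task: set of headings on a correct path for that task}
    min_share:    assumed per-task threshold for "unwanted traffic"
    min_tasks:    assumed threshold for "several unrelated tasks"
    """
    wrong_tasks = defaultdict(list)
    for task, row in visits.items():
        total = participants.get(task, 0)
        if total == 0:
            continue  # no participants recorded for this task
        for heading, clicks in row.items():
            if heading in correct.get(task, set()):
                continue  # traffic that belongs here is not "evil"
            if clicks / total >= min_share:
                wrong_tasks[heading].append(task)
    # Read across each heading's row: keep those that mislead in enough tasks.
    return {h: sorted(ts) for h, ts in wrong_tasks.items() if len(ts) >= min_tasks}


# Hypothetical counts for illustration only (not the consumer.org.nz results).
visits = {
    "mobile phone": {"Appliances > Personal": 4, "Technology > Phones": 30},
    "vacuum cleaner": {"Appliances > Personal": 3, "Appliances > Cleaning": 35},
    "home theater": {"Appliances > Personal": 3, "Technology > TV": 28},
    "electric shaver": {"Appliances > Personal": 36},
}
participants = {task: 40 for task in visits}
correct = {
    "mobile phone": {"Technology > Phones"},
    "vacuum cleaner": {"Appliances > Cleaning"},
    "home theater": {"Technology > TV"},
    "electric shaver": {"Appliances > Personal"},
}

print(find_evil_attractors(visits, participants, correct))
# -> {'Appliances > Personal': ['home theater', 'mobile phone', 'vacuum cleaner']}
```

Summing evidence across tasks is the point of this design. A heading that takes only a modest share of any one task never stands out in a single column, but it becomes obvious once its row is read as a whole.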

Why do they happen?

It’s usually not hard to figure out why an item in our tree is an evil attractor. In almost all cases, it’s because the item is vague or ambiguous – something that could mean a lot of different things to a lot of different people.

...

Individually, those choices may be defensible, but as information architects, are we really going to group mobile phones with vacuum cleaners? The “personal” link between them is tenuous at best.

How do we get rid of them?

...

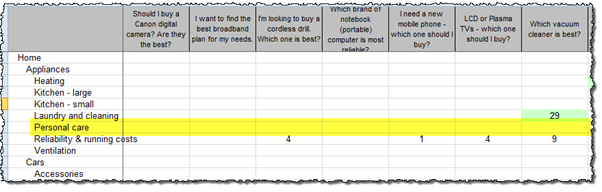

In the second round of tree testing, among the other changes we made to the tree, we replaced “Personal” with “Personal care”. A few days later, the results confirmed our thinking – our former evil attractor was no longer luring participants away from the correct answers:

Evil attractors as waypoints

...

- Top-level headings are usually easy to check for evil attractors, if the tool we’re using has a way of highlighting “first clicks”. If we see a level-1 topic getting lots of incorrect clicks across several tasks, chances are that it’s an evil attractor. A very common culprit is an “other stuff” heading like Resources. It’s so general that it will get all kinds of traffic for all kinds of reasons.
- Mid-level headings typically need a bit more detective work to see if they’re evil attractors. Usually this means eyeballing all of our task results to see if certain mid-level topics are luring clicks when they shouldn’t. For more, see “Where they went” earlier in this chapter.
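For top-level headings, the first-click check can be sketched in a similarly hedged way. This minimal Python version assumes that each participant’s click path for each task is available as a list of heading names, and that we know which level-1 headings lead to a correct answer for each task. The function name, the task names, the example data (including the vague Resources heading) and both thresholds are hypothetical. They are chosen only to illustrate the idea.

```python
from collections import Counter, defaultdict


def first_click_attractors(paths, correct_first, min_share=0.10, min_tasks=3):
    """Flag level-1 headings that win wrong first clicks in several tasks.

    paths:         {task: [click path per participant, each a list of headings]}
    correct_first: {task: set of level-1 headings that lead to a correct answer}
    min_share, min_tasks: assumed thresholds, to be tuned for each study
    """
    flagged = defaultdict(list)
    for task, task_paths in paths.items():
        # The first element of each non-empty path is the participant's first click.
        firsts = Counter(path[0] for path in task_paths if path)
        total = sum(firsts.values())
        for heading, n in firsts.items():
            wrong = heading not in correct_first.get(task, set())
            if wrong and n / total >= min_share:
                flagged[heading].append(task)
    return {h: sorted(ts) for h, ts in flagged.items() if len(ts) >= min_tasks}


# Hypothetical click paths: 10 participants per task, with a vague "Resources" heading.
paths = {
    "renew a permit": [["Services", "Permits"]] * 8 + [["Resources"]] * 2,
    "report a pothole": [["Services", "Roads"]] * 7 + [["Resources"]] * 3,
    "find meeting minutes": [["About us", "Council"]] * 6 + [["Resources"]] * 4,
    "download a map": [["Resources", "Maps"]] * 9 + [["About us"]] * 1,
}
correct_first = {
    "renew a permit": {"Services"},
    "report a pothole": {"Services"},
    "find meeting minutes": {"About us"},
    "download a map": {"Resources"},
}

print(first_click_attractors(paths, correct_first))
# -> {'Resources': ['find meeting minutes', 'renew a permit', 'report a pothole']}
```

A flagged heading is a prompt for closer inspection, not a verdict. The thresholds only decide which headings deserve a second look.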

...
