Results from a single tree

If we only tested a single tree in our first round, then there are two likely outcomes from that testing:

- The tree did reasonably well and just needs refining.

This means that the tree’s main organizing scheme performed reasonably well (indicated by an overall success rate around 60%), but was hurt by specific weaknesses that we think we can fix.

- The tree performed poorly and needs major rethinking.

In this case, we decide that the tree’s approach is just not the right one, and trying to revise it would just result in a lot of work to get to “mediocre”. Best to try another tree (which means starting over). This is why we recommend testing several trees instead of one.

Results from competing trees

If we tested several alternative trees (as we recommended in “The design phase: creating new trees” in Chapter 3), we’re keen to see which performed best (so we can pursue them) and which performed worst (so we can discard them).

If the overall success rates point to a clear winner, we should go with that tree and then revise it to be even better.

If there is more than one “winner”, we can still discard the poor trees and narrow our field:

- Ideally we would revise the best trees, and pit them against each other in the next round.

- If we’re tight on time or budget, we may have to pick one of the winning trees (based on other criteria such as effort to implement) and perhaps incorporate some of the elements that made the other winners perform well (see below).
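To make the comparison concrete, here is a minimal sketch of ranking competing trees by overall success rate. The tree names, the outcome data, and the 10-point `margin` used to decide whether a tree counts as a "winner" are all illustrative assumptions, not values from our study or from any particular testing tool.

```python
def success_rate(outcomes):
    """Fraction of task attempts that ended at a correct answer."""
    return sum(outcomes) / len(outcomes)


def rank_trees(results, margin=0.10):
    """Rank trees by success rate and list those close to the best one.

    `margin` is an arbitrary assumption: trees within this distance of
    the top score are treated as joint winners.
    """
    rates = sorted(
        ((success_rate(outcomes), name) for name, outcomes in results.items()),
        reverse=True,
    )
    best_rate = rates[0][0]
    leaders = [name for rate, name in rates if best_rate - rate < margin]
    return rates, leaders


# Hypothetical results: one True/False per task attempt.
results = {
    "Tree A": [True] * 7 + [False] * 3,
    "Tree B": [True] * 13 + [False] * 7,
    "Tree C": [True] * 4 + [False] * 6,
}

rates, leaders = rank_trees(results)
for rate, name in rates:
    print(f"{name}: {rate:.0%}")
print("Carry forward:", leaders)
```

With this sample data, Tree A and Tree B would both go forward and Tree C would be discarded. In practice we would also weigh the other criteria mentioned above, such as effort to implement.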

Keeping a record

As we select and discard trees and elements in them, and make changes to them, it’s a good idea to keep a record of:

- Previous versions of the trees

This is easily done by saving our old versions and making our changes to a new “current” copy of them. If we ever need to go back for an idea we previously discarded, it will be there waiting for us.

- Decisions and our rationale for them

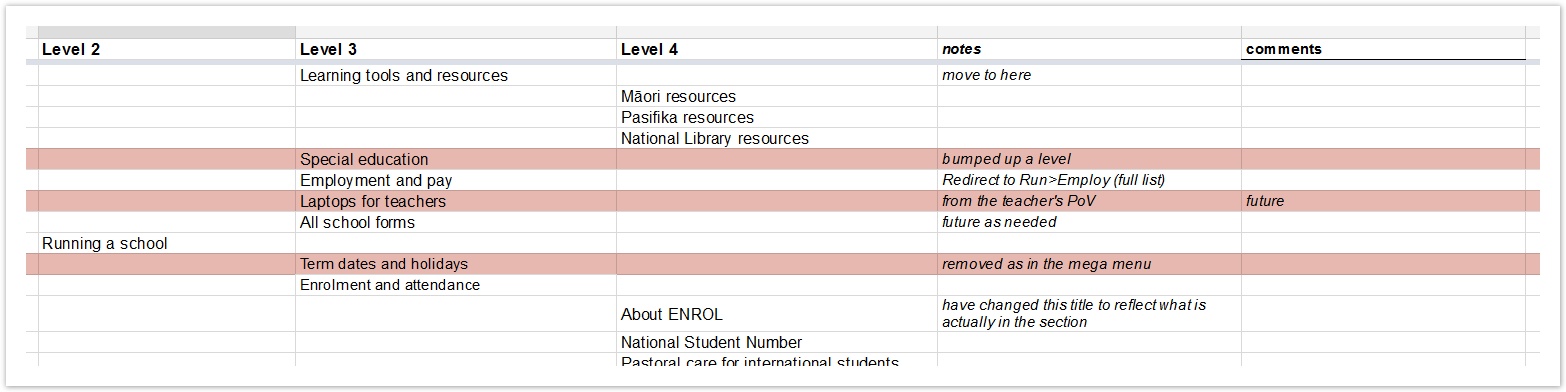

As we revise and reword individual items or entire sections of a tree, we can annotate them with our decisions and a brief rationale (where needed). We can also use color-coding or text styling to distinguish items that were deleted, changed, or are placeholders for future content.

In the example below, we've color-coded deleted item as red (some marked as "future" for later releases), and added rationale and instructions for other items:

Updating correct answers accordingly

If we make any substantial revisions to our tree(s), we should re-test those revisions, to make sure our changes work.

As we make our revisions, we must also remember that these changes may affect the correct answers we’ve marked for our tasks.

- If we change the tree, the location of correct answers may move (for example, FAQ was under Contact us, but is now under Support).

Or, some correct answers may disappear (or new ones appear) because of our reshuffling of topics in the tree.

- If we change a task, the correct answers for it may also change.

Minor tweaks of phrasing are unlikely to matter, but if we change the meaning or purpose of a task, we need to check that its marked answers are still correct and complete.
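If we keep our tree and our marked answers in a machine-readable form, a check like the following can flag answers that no longer exist after a revision. This is only a sketch: the nested-dictionary tree format, the task names, and the function names are assumptions made for illustration, and most testing tools will have their own way of storing this.

```python
def all_paths(tree, prefix=()):
    """Yield every node in a nested-dict tree as a tuple of labels from the root."""
    for label, children in tree.items():
        path = prefix + (label,)
        yield path
        yield from all_paths(children, path)


def stale_answers(tree, correct_answers):
    """Return, per task, the marked answers that are missing from the tree."""
    valid = set(all_paths(tree))
    stale = {}
    for task, paths in correct_answers.items():
        missing = [p for p in paths if p not in valid]
        if missing:
            stale[task] = missing
    return stale


# Hypothetical revised tree: FAQ has moved from Contact us to Support.
revised_tree = {
    "Home": {
        "Contact us": {},
        "Support": {"FAQ": {}},
    }
}

# Answers as they were marked against the previous version of the tree.
correct_answers = {
    "Find answers to common questions": [("Home", "Contact us", "FAQ")],
    "Get in touch": [("Home", "Contact us")],
}

result = stale_answers(revised_tree, correct_answers)
for task, paths in result.items():
    print(task, "->", [" > ".join(p) for p in paths])
```

A check like this only catches answers that have moved or disappeared. It cannot tell us about new correct answers created by the reshuffle, so we still need to review each task by hand.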

Pandering to the task

When we analyze individual tasks, especially low-scoring ones, it’s natural to want to fix the problems we discover. This usually means shuffling or rewording topics.

That’s all well and good, but we need to make sure that we’re making a change that will help the tree perform better in real-world use, not just for this single task.

If we’re considering a change to our tree to fix a low-scoring task, we should make sure to:

- Review other tasks that visit that part of the tree, look at their results, and check that our change won’t cause problems for them.

- Review other reasons that users may visit that part of the site; they may not have been important enough to become tasks in our study, but they should still be supported by the tree.
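The first of these checks can be partly automated if we can export the paths that participants took. The sketch below assumes a made-up data shape (each session is a list of visited nodes, each node a tuple of labels from the root) and hypothetical task names. It simply counts, for each task, how many sessions passed through the part of the tree we are thinking of changing.

```python
def tasks_visiting(subtree, sessions_by_task):
    """Count, per task, the sessions that visited `subtree` or anything below it."""
    depth = len(subtree)
    counts = {}
    for task, sessions in sessions_by_task.items():
        hits = sum(
            any(node[:depth] == subtree for node in session)
            for session in sessions
        )
        if hits:
            counts[task] = hits
    return counts


# Hypothetical click paths from a tree test.
sessions_by_task = {
    "Find the FAQ": [
        [("Home",), ("Home", "Contact us"), ("Home", "Contact us", "FAQ")],
        [("Home",), ("Home", "Support")],
    ],
    "Report a fault": [
        [("Home",), ("Home", "Contact us")],
    ],
    "Read company news": [
        [("Home",), ("Home", "About")],
    ],
}

affected = tasks_visiting(("Home", "Contact us"), sessions_by_task)
print(affected)
```

Any task that shows up here besides the one we are trying to fix deserves a second look before we commit to the change. Reasons for visiting that were never turned into tasks will not appear in this data, so the second check still relies on our own judgment.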

Next: Cherrypicking and hybrid trees
