We also need to know how directly our participants found the right answer. Did they have to wander around first, did they try one path and then change their mind, or did they know where they were going from the start?

Backtracking usually happens when a participant clicks a topic, expecting to see a certain set of subtopics, and gets something different. They then decide they’re in the wrong section, back up a level (or two), and try a different path.
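If click paths are exported as ordered lists of the nodes each participant visited, backtracking can be counted mechanically. The sketch below uses one plausible rule, not necessarily the one any particular tree-testing tool uses: a backtrack starts whenever a click does not lead to a child of the current node, and a run of upward moves (backing up "a level or two") counts as a single backtrack. The `Node` tuple representation and the topic labels other than "Law and the Internet" are hypothetical.

```python
from typing import Sequence, Tuple

# Hypothetical representation: a node is its full path from the root,
# e.g. ("Home", "Law and the Internet").
Node = Tuple[str, ...]


def is_child(parent: Node, node: Node) -> bool:
    """True if `node` sits exactly one level below `parent`."""
    return len(node) == len(parent) + 1 and node[: len(parent)] == parent


def count_backtracks(clicks: Sequence[Node]) -> int:
    """Count backtracking episodes in one participant's click path.

    A step that does not go down to a child starts a backtrack; consecutive
    upward steps (e.g. backing up two levels) belong to the same episode.
    """
    backtracks = 0
    going_up = False
    for current, nxt in zip(clicks, clicks[1:]):
        down = is_child(current, nxt)
        if not down and not going_up:
            backtracks += 1
        going_up = not down
    return backtracks


# Made-up click path: the participant tries "Law and the Internet",
# backs up to the top, then takes a different branch.
path = [
    ("Home",),
    ("Home", "Law and the Internet"),
    ("Home",),
    ("Home", "Copyright"),
    ("Home", "Copyright", "Penalties"),
]
print(count_backtracks(path))  # 1
```

Treating a multi-level retreat as one episode matches the idea that the participant made a single decision ("I'm in the wrong section") before trying another path.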

If we see a lot of backtracking at a certain topic, it suggests that either:

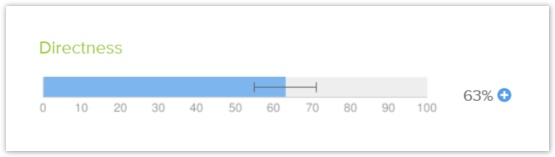

As we saw for overall scores, backtracking can be measured in several ways:

The main thing we’re looking for is a score that’s much lower than the average for the test. That means that, regardless of their success rate, participants are having a much harder time getting to where they’re going:

In any case, a score that is markedly lower than average is a trigger for us to dig deeper into the click paths to see what’s really going on.
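Picking out the tasks whose backtracking score is much lower than the test average can be sketched as follows. Every threshold here is an assumption for illustration: directness is taken as the share of participants who never backtracked, the test average is the mean of the per-task scores, and "much lower" means more than 20 percentage points below that mean. The task names and counts are made up.

```python
from statistics import mean
from typing import Dict, List


def directness(backtrack_counts: List[int]) -> float:
    """Share of participants who completed the task without backtracking."""
    return sum(1 for n in backtrack_counts if n == 0) / len(backtrack_counts)


def flag_indirect_tasks(
    results: Dict[str, List[int]], margin: float = 0.20
) -> List[str]:
    """Return tasks whose directness falls more than `margin` below the test average.

    `results` maps each task to its participants' backtrack counts.
    `margin` is an arbitrary, hypothetical threshold.
    """
    scores = {task: directness(counts) for task, counts in results.items()}
    average = mean(list(scores.values()))
    return [task for task, score in scores.items() if score < average - margin]


# Made-up backtrack counts for five participants per task.
results = {
    "Task 1": [0, 0, 0, 1, 0],  # directness 0.8
    "Task 2": [0, 0, 0, 0, 0],  # directness 1.0
    "Task 3": [1, 2, 0, 1, 1],  # directness 0.2
    "Task 4": [0, 0, 1, 0, 0],  # directness 0.8
}
print(flag_indirect_tasks(results))  # ['Task 3']
```

Comparing each task against the test's own average, rather than a fixed target, keeps the flag relative to how hard the tree is overall.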

When we analyze a given task, we may find topics that attract a lot of clicks. We hope that the correct paths draw the most traffic, but we often find that many participants choose an incorrect topic.

Here's an example from InternetNZ, an Internet-advocacy organization. The task is:

Learn more about the penalties for sharing files illegally.

In the "pie tree" diagram below, note how several participants chose "Law and the Internet". Most of them then backed up (the blue segment of the pie) when they didn't like the subheadings under it. "Law and the Internet" attracted their clicks (understandably), but the subheadings showed them they were in the wrong place:
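The counts behind a pie-tree segment can be tallied from raw click paths. This sketch assumes each path is an ordered list of visited nodes and that the last node is the participant's chosen answer; for every visit to a topic it records whether the participant then went deeper, backed up (or jumped elsewhere), or chose that topic as the answer. It counts visits rather than unique participants, and every label except "Law and the Internet" is invented.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Tuple

Node = Tuple[str, ...]  # hypothetical: full path from the root


def pie_tree_tally(paths: Sequence[Sequence[Node]]) -> Dict[Node, Counter]:
    """For every visited topic, count what happened next across all participants."""
    tally: Dict[Node, Counter] = defaultdict(Counter)
    for clicks in paths:
        for i, node in enumerate(clicks):
            if i == len(clicks) - 1:
                # Assumed: the last node in a path is the answer the participant chose.
                tally[node]["chose answer"] += 1
                continue
            nxt = clicks[i + 1]
            went_deeper = len(nxt) == len(node) + 1 and nxt[: len(node)] == node
            tally[node]["went deeper" if went_deeper else "backed up"] += 1
    return tally


# Made-up paths: two participants back out of "Law and the Internet",
# one goes deeper into it.
law = ("Home", "Law and the Internet")
penalties = ("Home", "Copyright", "Penalties")
paths: List[List[Node]] = [
    [("Home",), law, ("Home",), ("Home", "Copyright"), penalties],
    [("Home",), law, ("Home",), ("Home", "Copyright"), penalties],
    [("Home",), law, law + ("Filtering",)],
]
tally = pie_tree_tally(paths)
counts = tally[law]
print(counts["backed up"], counts["went deeper"])  # 2 1
```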

Sometimes this is just a “one-off”, where the topic seems a reasonable choice for the single task we’re analyzing. While we could “fix” this topic (by rewording it or moving it elsewhere), we need to be sure that our fix improves the tree in general (beyond this specific task), and that it won’t mess up any other scenarios that also go through this part of the tree.

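Sometimes, though, we’ll see the same topic attracting incorrect clicks across several tasks. This is what we call an “evil attractor”: a topic that lures participants down the wrong path no matter what they are looking for.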
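Spotting topics that draw incorrect clicks across several tasks can be automated once each task's correct answers are known. In this sketch, a click is "incorrect" when the topic is neither a correct answer nor an ancestor of one, and a topic is flagged when at least `min_share` of a task's participants clicked it incorrectly in at least `min_tasks` tasks. Both thresholds, the data layout, and the example tasks and labels are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Node = Tuple[str, ...]  # hypothetical: full path from the root
Task = Tuple[Set[Node], List[List[Node]]]  # (correct answers, click paths)


def on_correct_path(node: Node, correct: Set[Node]) -> bool:
    """True if `node` is a correct answer or an ancestor of one."""
    return any(answer[: len(node)] == node for answer in correct)


def find_attractors(
    tasks: Dict[str, Task], min_share: float = 0.25, min_tasks: int = 2
) -> Dict[Node, List[str]]:
    """Map each frequently mis-clicked topic to the tasks where it drew wrong clicks."""
    hits: Dict[Node, List[str]] = defaultdict(list)
    for name, (correct, paths) in tasks.items():
        wrong: Dict[Node, int] = defaultdict(int)
        for clicks in paths:
            for node in set(clicks):  # count each participant once per topic
                if not on_correct_path(node, correct):
                    wrong[node] += 1
        for node, n in wrong.items():
            if n / len(paths) >= min_share:
                hits[node].append(name)
    return {node: names for node, names in hits.items() if len(names) >= min_tasks}


law = ("Home", "Law and the Internet")
tasks: Dict[str, Task] = {
    "Penalties for file sharing": (
        {("Home", "Copyright", "Penalties")},
        [
            [("Home",), law, ("Home",), ("Home", "Copyright"), ("Home", "Copyright", "Penalties")],
            [("Home",), ("Home", "Copyright"), ("Home", "Copyright", "Penalties")],
        ],
    ),
    "Report spam": (
        {("Home", "Security", "Spam")},
        [
            [("Home",), law, law + ("Spam law",)],
            [("Home",), ("Home", "Security"), ("Home", "Security", "Spam")],
        ],
    ),
}
attractors = find_attractors(tasks)  # flags only "Law and the Internet"
for node, names in attractors.items():
    print(" > ".join(node), names)
```

Requiring the topic to misfire in more than one task is what separates a cross-task attractor from the one-off case, where a fix for a single task might hurt others.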

Occasionally, we may find a topic that attracts clicks when it shouldn’t, even though it doesn’t seem to have anything to do with the task. In this case, the problem may lie not in the topic itself but in its sibling topics (those on the same level). If the siblings are not clear and distinct from each other, the attractor topic may simply be the “best of a bad lot”, the participants’ best guess at this point in the tree. The fix, then, is to revise the sibling topics so that everything at that level is clearer.

Sometimes a topic attracts clicks when it should – that is, it’s on a correct path – but a lot of participants then backtrack.

This usually happens because those participants see the subtopics, scratch their respective heads, and decide they’re in the wrong place. There are two common situations here:

A visualization like the "pie tree" graphs shown above may give us enough information to judge where participants got into trouble.

Sometimes, however, we need to see the actual sequence of clicking to determine how participants behaved during a task. For this, we can look at the detailed click paths for a task, usually presented in a spreadsheet like this:

This is too much to see at once, so we cut it down to the parts we're most interested in:

What we're left with is a detailed map of problems, an exact description of where participants went partly or wholly wrong:

We can now see patterns in where our tree failed, whether it's evil attractors luring clicks or headings that trigger frequent backtracking.
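The cut-down step, keeping only the click paths where participants went partly or wholly wrong, can be sketched as a simple filter. The row layout is a hypothetical assumption (participant ID, whether the chosen answer was correct, and the click path), and so is the interpretation: "partly wrong" here means the participant backtracked at least once even if they eventually succeeded, and "wholly wrong" means they failed.

```python
from dataclasses import dataclass
from typing import List, Tuple

Node = Tuple[str, ...]  # hypothetical: full path from the root


@dataclass
class ClickPathRow:
    participant: str
    success: bool
    clicks: List[Node]


def backtracked(clicks: List[Node]) -> bool:
    """True if any click did not go down to a child of the previous node."""
    return any(
        not (len(nxt) == len(cur) + 1 and nxt[: len(cur)] == cur)
        for cur, nxt in zip(clicks, clicks[1:])
    )


def problem_paths(rows: List[ClickPathRow]) -> List[ClickPathRow]:
    """Keep only participants who failed or took an indirect route."""
    return [row for row in rows if not row.success or backtracked(row.clicks)]


law = ("Home", "Law and the Internet")
copyright_ = ("Home", "Copyright")
rows = [
    # Direct success: dropped from the problem map.
    ClickPathRow("P1", True, [("Home",), copyright_, copyright_ + ("Penalties",)]),
    # Indirect success: kept.
    ClickPathRow("P2", True, [("Home",), law, ("Home",), copyright_, copyright_ + ("Penalties",)]),
    # Direct failure: kept.
    ClickPathRow("P3", False, [("Home",), law, law + ("Filtering",)]),
]
for row in problem_paths(rows):
    print(row.participant, " > ".join(row.clicks[-1]))
```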
