Another result we can measure is speed (time taken). There are two metrics in play here:

- How long it takes participants to complete a task
- How long a participant takes for each click

In both cases, high speed suggests confidence, or at least clarity: the participant found it easy to choose between competing headings. Conversely, if they took a long time to decide, this suggests that the headings were harder to understand, harder to choose between, or both.

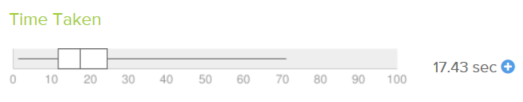

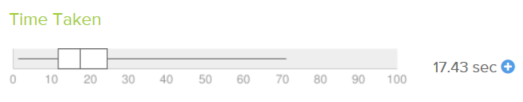

For a given task, some tools show us the average (or median) time it took participants to complete it:

By itself, this is just a number. But if the tool also provides an average time across all tasks (or if we calculate it ourselves), we can spot which tasks took substantially longer to complete, and then drill into those tasks to look for possible causes.
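If the tool only exports per-participant times, the comparison is easy to do ourselves. The Python sketch below is one way to do it, under assumptions of our own: the `task_times` layout and its numbers are made up, the "average across all tasks" is taken as the mean of the per-task medians, and "substantially longer" is a hypothetical 1.5× threshold.

```python
from statistics import mean, median

# Made-up sample export: task name -> completion times in seconds, one per participant.
task_times = {
    "Find store hours": [12.0, 15.5, 9.8, 14.1],
    "Return a product": [31.2, 45.0, 28.7, 39.9],
    "Contact support": [16.4, 18.0, 13.2, 20.5],
}

def flag_slow_tasks(times_by_task, factor=1.5):
    """Return tasks whose median time exceeds `factor` times the mean of all task medians.

    The 1.5 default is a hypothetical cut-off for "substantially longer".
    """
    medians = {task: median(times) for task, times in times_by_task.items()}
    overall = mean(medians.values())
    return [task for task, m in medians.items() if m > factor * overall]

print(flag_slow_tasks(task_times))  # ['Return a product']
```

Medians are used per task so that one participant who wandered off mid-task does not dominate that task's figure.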

While task time is the most obvious measure of speed, it’s problematic because some answers are deeper in the tree than others. Some tasks take only a few clicks to complete (if the answer is on level 3 of the tree, for example), while others may take more (if it’s on level 5), and this affects the total time. For example:

- If a participant takes 15 seconds for the level-3 task, that’s 3 clicks, meaning 5 seconds each, which is a bit slow.
- If the same participant takes 15 seconds for the level-5 task, that’s 5 clicks, meaning 3 seconds each, which is considerably faster.
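The arithmetic in these examples is simply task time divided by the number of clicks. A minimal Python sketch follows; the function name is our own, and like the examples it assumes the participant took the direct path, so the click count equals the answer's depth (with backtracking, the real count would be higher).

```python
def seconds_per_click(task_seconds: float, clicks: int) -> float:
    """Average time per click for one participant on one task."""
    if clicks <= 0:
        raise ValueError("clicks must be positive")
    return task_seconds / clicks

# The same 15-second task time at two different depths.
print(seconds_per_click(15, 3))  # 5.0
print(seconds_per_click(15, 5))  # 3.0
```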

This gets even more problematic if there are two correct answers for a task – for example, one at level 3 and one at level 5.

For this reason, we recommend taking task times with a grain of salt and factoring in how many clicks were involved.

Ideally, we want to flag moments when clicks slowed down – where a participant took longer than they usually do between clicks. If a participant falls below their usual “click pace” during a task, that's an indication that the participant took longer to understand their choices and make a decision.

The task’s speed score can then be calculated as the percentage of participants who didn’t slow down significantly during that task. For example, we could decide that a participant "slowed down" on a task if at least one of their click times on that task was more than one standard deviation above their average click time across the entire test.
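Here is a minimal Python sketch of that example rule, assuming we have each participant's individual click times tagged by task. The data layout and values are made up, and we chose the population standard deviation (`pstdev`); the sample version (`stdev`) would be an equally reasonable reading of "a standard deviation".

```python
from statistics import mean, pstdev

# Made-up sample data: participant -> list of (task, seconds taken for one click).
clicks = {
    "p1": [("A", 2.0), ("A", 2.1), ("B", 1.9), ("B", 6.0), ("C", 2.2)],
    "p2": [("A", 5.0), ("A", 5.5), ("B", 6.0), ("B", 12.0), ("C", 5.2)],
    "p3": [("A", 3.0), ("A", 3.2), ("B", 3.1), ("B", 3.3), ("C", 9.0)],
}

def slowed_tasks(records):
    """Tasks where at least one click exceeded the participant's mean click time
    (over the whole test) by more than one population standard deviation."""
    times = [seconds for _, seconds in records]
    threshold = mean(times) + pstdev(times)
    return {task for task, seconds in records if seconds > threshold}

def speed_scores(all_clicks):
    """Percentage of participants who attempted each task without slowing down on it."""
    attempted, slowed = {}, {}
    for records in all_clicks.values():
        slow = slowed_tasks(records)
        for task in {task for task, _ in records}:
            attempted[task] = attempted.get(task, 0) + 1
            if task in slow:
                slowed[task] = slowed.get(task, 0) + 1
    return {task: 100 * (n - slowed.get(task, 0)) / n for task, n in attempted.items()}

for task, score in sorted(speed_scores(clicks).items()):
    print(task, round(score))
# Prints, one per line: A 100, B 33, C 67
```

Note that the threshold is computed per participant, so a 6-second click counts as a slowdown for `p1` but not for `p2`.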

This is a better measure of speed than the “task time” described above, because:

- It’s not affected by how many clicks a task requires to find the right answer.
- It’s not affected by the fact that some participants naturally click through the tasks faster than others.

This works because the speed is measured relative to a single participant. To spot a slowdown, we look for tasks where that person took a long time between clicks – and by “long”, we mean longer than that same person took for other tasks. For example:

- Suppose a “slow” participant takes 6 seconds per click as they move down the tree. But then they encounter a tough choice, and take 12 seconds to click. We would flag that task (even better, that exact spot in the tree) as a slowdown for that participant, because 12 seconds is substantially longer than their average of 6 seconds.
- Suppose a “fast” participant takes 2 seconds per click. When they encounter the same hard choice, they take 6 seconds to click. Again, we would flag that as a slowdown, because even though they did it in 6 seconds (normal speed for the first participant), it’s substantially slower than their own usual rate of 2 seconds.
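The key idea in these examples is comparing each click with the participant's own usual pace rather than with an absolute number of seconds. A minimal Python sketch, where the function name and the 1.5× ratio standing in for "substantially longer" are our own hypothetical choices:

```python
def is_slowdown(click_seconds: float, usual_seconds: float, ratio: float = 1.5) -> bool:
    """Flag a click that took at least `ratio` times the participant's usual click time."""
    return click_seconds >= ratio * usual_seconds

# The two participants described above, at the same hard choice.
print(is_slowdown(12, usual_seconds=6))  # True  (2x their usual pace)
print(is_slowdown(6, usual_seconds=2))   # True  (3x their usual pace)
# The same 6-second click is unremarkable for the slower participant.
print(is_slowdown(6, usual_seconds=6))   # False
```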

For a given participant, that slow spot might have been caused by any number of things – a tough choice in the tree, the doorbell ringing, anything really. But if we look at all the participants who did that task, we might see that most of them were slow at that spot. That suggests the headings there were hard to choose between for that task – a valuable thing to know.

When we spot a task with a poor speed score, we need to find out if there are specific locations in the tree that are to blame. If the tool provides a way to inspect click times for tree headings (either with a graph or raw data that we can process), we can determine which parts of the tree are bottlenecks.
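If the tool exports raw click data, one way to rank headings is to express each click time as a multiple of that participant's usual pace and take the median per heading. The Python sketch below assumes a hypothetical export of `(participant, heading being viewed, seconds before the next click)` tuples, plus a usual pace per participant computed across the whole test; all values are made up.

```python
from collections import defaultdict
from statistics import median

# Made-up export for one task: (participant, heading being viewed, seconds before next click).
task_clicks = [
    ("p1", "Home", 2.0), ("p1", "Home > Products", 2.2),
    ("p1", "Home > Products > Accessories", 7.5),
    ("p2", "Home", 5.5), ("p2", "Home > Products", 6.0),
    ("p2", "Home > Products > Accessories", 14.0),
    ("p3", "Home", 3.1), ("p3", "Home > Support", 3.4),
]
# Hypothetical usual click time per participant, measured across the entire test.
usual_pace = {"p1": 2.1, "p2": 5.8, "p3": 3.2}

def heading_slowness(clicks, pace):
    """Median ratio of click time to each participant's usual pace, per heading, slowest first."""
    ratios = defaultdict(list)
    for participant, heading, seconds in clicks:
        ratios[heading].append(seconds / pace[participant])
    scores = {heading: median(r) for heading, r in ratios.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

print(heading_slowness(task_clicks, usual_pace)[0][0])  # Home > Products > Accessories
```

A ratio well above 1 means participants typically lingered at that heading longer than they usually do, which makes it a candidate bottleneck.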

Once we locate the heading where participants are slowing down, there may be several reasons why it’s a bottleneck:

- One or more of its subheadings are not clear – that is, participants were unsure what those subheadings meant.
- Its subheadings are not distinguishable – that is, participants could not quickly see the differences between them.
- None of the subheadings “answered” the task. If we find a lot of backtracking at this spot, this is the likely cause.
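To check for backtracking at a bottleneck, one rough approach is to count how often participants left a heading for somewhere other than one of its subheadings. The Python sketch below assumes (our assumption, not any tool's format) that click paths are exported as full heading paths joined with `" > "`, in the order visited; the paths themselves are made up.

```python
from collections import Counter

# Made-up click paths for one task, as full heading paths in the order visited.
paths = [
    ["Home", "Home > Products", "Home > Products > Accessories",
     "Home > Products", "Home > Support", "Home > Support > Returns"],
    ["Home", "Home > Products", "Home > Products > Accessories",
     "Home > Products", "Home > Products > Parts"],
    ["Home", "Home > Support", "Home > Support > Returns"],
]

def backtrack_counts(click_paths):
    """Count, per heading, how often a participant left it for something other than a subheading."""
    counts = Counter()
    for path in click_paths:
        for current, nxt in zip(path, path[1:]):
            if not nxt.startswith(current + " > "):
                counts[current] += 1
    return counts

print(backtrack_counts(paths).most_common(1))  # [('Home > Products > Accessories', 2)]
```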
