Most tools let you add survey questions to your tree test. This can help you in several ways:

- You can ask questions that help you analyze your results (e.g. by comparing results between groups of participants).

- You can ask questions that fill gaps in your user research (e.g. things that you didn’t ask in your last user survey).

- You can ask if participants would like to be involved in future studies for this website or product.

For screening participants

Your tool may let you screen participants based on their answers to opening questions: if they answer “correctly”, they can proceed with the test; if they don’t, they are politely dismissed.

If your tool doesn’t give you this option, there are other ways of screening participants – see Screening for specific participants in Chapter 9.

For splitting results later

Probably the most useful thing survey questions can do is let you filter your results later, so you can compare groups of participants.

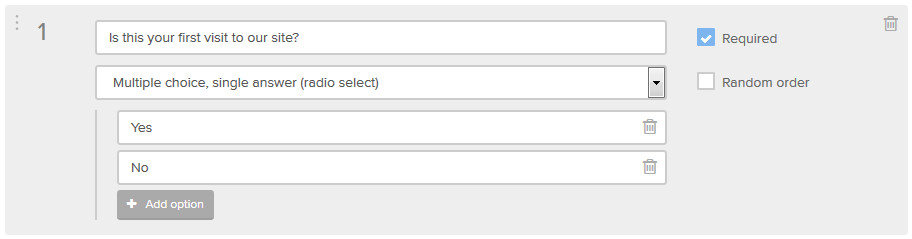

For example, if you post a web ad on your site that asks visitors to do a tree test, you may want to find out if return visitors do better than first-time visitors (presumably because they’re already familiar with your site’s structure). If you add a survey question that asks them “Is this your first visit to our site?”, you can later split up the results into two groups (yes and no) and see if and where their results differ.
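If your tool doesn’t do this split for you, or you export the raw data to analyze elsewhere, the split is simple to do yourself. The Python sketch below assumes a hypothetical export with one record per participant per task, including a `first_visit` field holding the participant’s survey answer and a `success` flag. Real export formats vary by tool, and the data here is made up purely for illustration.

```python
from collections import defaultdict

# Made-up sample data in a hypothetical export format: one record per
# participant per task. "first_visit" is the participant's answer to the
# survey question; "success" is True if they chose a correct answer.
results = [
    {"participant": "p1", "first_visit": "yes", "task": 1, "success": False},
    {"participant": "p1", "first_visit": "yes", "task": 2, "success": True},
    {"participant": "p2", "first_visit": "no", "task": 1, "success": True},
    {"participant": "p2", "first_visit": "no", "task": 2, "success": True},
    {"participant": "p3", "first_visit": "yes", "task": 1, "success": False},
    {"participant": "p3", "first_visit": "yes", "task": 2, "success": True},
    {"participant": "p4", "first_visit": "no", "task": 1, "success": True},
    {"participant": "p4", "first_visit": "no", "task": 2, "success": False},
]


def success_rates_by_group(rows, question):
    """Return {task: {answer: success_rate}}, split by each answer to `question`."""
    # counts[task][answer] holds [successes, attempts]
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for row in rows:
        tally = counts[row["task"]][row[question]]
        tally[0] += int(row["success"])
        tally[1] += 1
    return {
        task: {answer: successes / attempts
               for answer, (successes, attempts) in by_answer.items()}
        for task, by_answer in counts.items()
    }


rates = success_rates_by_group(results, "first_visit")
for task in sorted(rates):
    print(f"Task {task}: {rates[task]}")
```

Because the grouping question is passed in as a parameter, the same function works for any multiple-choice survey question you add; free-text answers would need to be coded into categories first.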

Note that you do need to plan your questions carefully to make sure you’re getting the splits you want to compare.

- For more on testing different groups of users, see Different user groups in Chapter 9.

- For more on filtering results to analyze subsets of data, see Analyzing by user group or other criteria in Chapter 12.

For customer research

Good user experience depends on knowing who your users are and what makes them tick. That means that every chance we get to learn from users, we should be trying to fill gaps in what we know about them, and continually asking new questions to guide our designs.

The main purpose of a tree test, of course, is to test the tree you’ve come up with, before you build the site. However, because tree tests are very brief (typically about 5 minutes), you also have a chance to ask a few fill-in-the-gap questions.

Before you jump in and add a whole slew of research questions, however, consider the following:

- Questions that help you analyze the results later are usually more important than general research questions, so add those first.

- Tree-test tools typically do not offer the advanced capabilities of dedicated survey tools (such as if-then question logic and fancy reporting), so keep your “mini survey” simple.

- Don’t irritate your participants with a long list of follow-up questions. They have done what you asked by completing your tree test, and while they are probably willing to answer a few more questions, their goodwill will erode rapidly if you demand substantially more time and effort from them.

We recommend keeping the total number of questions to 5 or fewer. Because we usually ask some data-filtering questions to help our analysis, this leaves room for 2-3 general research questions at most.

If you find yourself with 5 or 10 (or more) questions that you really want answers to, consider creating a proper online survey to run separately from your tree test. You will be happier with the features offered by a full-on survey tool, and your participants will not feel like they’ve been tricked into doing more work than they signed up for.

For identification

In most online studies, we do not need to know the individual identities of our participants. As long as they are part of our target audience, we’re simply grateful that they take the time to help us with our research.

There are cases, however, where we do need to know participants’ identities. For example, we will need their contact info if:

- We’re rewarding participants individually for participating.

- We’re running a prize draw as an incentive (a very common way to get participants for tree tests).

- We want to follow up with certain participants (based on their choices in the tree test or how they answer survey questions).

- We want to build a pool of willing participants for future studies.

For tracking individual participants/rewards

There are cases where we need to know exactly which participants have completed the tree test. For example:

- An organization may want all of its employees to do a study for a new intranet that it's planning.

- You may be using a commercial research panel where panelists are automatically rewarded once they complete the study.

In these cases, you'll need a mandatory question that asks each participant for a unique identifier:

- For commercial panels, this is often a numeric identifier that the panel assigns to each panelist.

- The identifier may be automatically passed to your tree-test tool by the panel software, in which case you just need to set up the integration of the two tools. (See your tool's docs for details.)

- If there is no automatic integration, you'll need to ask each panelist to enter the identifier that their panel gave them.

- For other situations, you'll need to find out which identifier to use (email address is a common choice), and ask each participant for it.
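Once the test closes, you can check who has completed it by comparing the identifiers you collected against the list of people you expected. Here is a minimal Python sketch, assuming you have both lists as plain strings (for example, email addresses exported from your tool and from a staff roster). The function names and data are hypothetical, and normalizing by trimming and lowercasing is an assumption that suits email addresses in practice; for numeric panel IDs, trimming alone would do.

```python
def normalize(identifier):
    """Trim whitespace and lowercase, so ' Ann@Example.com' matches 'ann@example.com'."""
    return identifier.strip().lower()


def not_yet_completed(expected, submitted):
    """Expected identifiers with no matching submission, sorted."""
    done = {normalize(i) for i in submitted}
    return sorted(i for i in expected if normalize(i) not in done)


def unrecognized(expected, submitted):
    """Submitted identifiers that match nobody on the expected list (often typos)."""
    known = {normalize(i) for i in expected}
    return sorted({normalize(i) for i in submitted} - known)


# Hypothetical data: a staff roster and the identifiers entered in the test.
roster = ["ann@example.com", "bob@example.com", "cat@example.com"]
entered = [" Ann@Example.com", "bob@exmaple.com"]

print(not_yet_completed(roster, entered))  # → ['bob@example.com', 'cat@example.com']
print(unrecognized(roster, entered))       # → ['bob@exmaple.com']
```

Listing unrecognized identifiers alongside the missing ones helps you spot mistyped entries (like the misspelled domain above) before chasing people who may in fact have completed the test.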

For prize draws, follow-ups, and further studies

In most of the studies we run, we don't really need the contact info of every participant.

- If they're willing to give it to us (e.g. for a prize draw, for follow-ups on this study, or for future studies), that's great.

- But if they want to stay anonymous, that's fine too. We really just want their tree-test responses, and we don't want them to abandon the test because they feel strong-armed into giving up personal information.

We typically just ask for an email address, because it’s easy (one field that they type all the time) and pretty much everyone has one.

Whether they want to give us their email address is another matter, so we need to be polite about it:

- We make the email address optional. If they don’t want to give it, that’s fine, but we still want their test results.

- We promise not to spam them. We assure them that we won’t use their email for other purposes (such as advertising) or share their address with other organizations.

Our email question usually reads something like this:

Your email address (optional - only used for the prize draw, or if you asked to be notified about future research. No spam!)

For comments and additional feedback

Unmoderated online studies are great because they can collect data for us 24/7, but that also means that we lose the ability to converse with users directly.

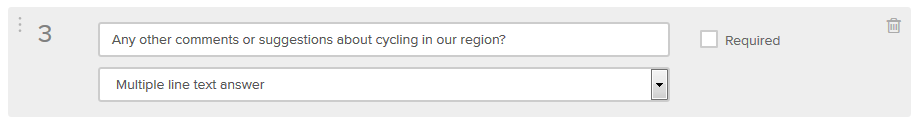

One way to get part of that benefit is to give participants an optional “comments” field where they can give feedback, ask questions, offer suggestions, and so on. It’s pretty much guaranteed that you’ll get a few snarky comments, but you’re also likely to get a few gems that give you additional insight into how your users think.

If you want to extend the conversation, you can also ask participants if it’s OK to contact them to follow up on their test results and comments. We’ve gained some of our most useful insights from talking to participants who were very critical in their feedback during the test; these are users who are typically very invested in the product/website you’re testing, and they’re pleasantly surprised to be contacted by a real person who wants to hear more about their needs and issues.

For further studies

If you’re running a tree test, it’s unlikely to be the only research you’re doing for your project. And participants can be hard to come by, so why not have one study help the next one?

We often finish our survey questions with an invitation to do future studies about this product/website. It usually looks like this:

To improve the Acme website, we're doing more studies like this over the next few months. Would you like to be notified to help us out?

In our experience, a lot of people are willing; we typically get a “yes” response rate of 40-60%.

Note that you’ll need contact info for these people (usually an email address), so you can notify them when your next study is open for business. If we already asked them for an email address for a prize draw, we just use that instead of asking for it again.

Ask before or after the test?

Some tree-testing tools let you decide when the survey questions will be presented – before the tree test, after, or some of each.

Be careful about asking questions before you present the tasks. Your questions (and any multiple-choice answers you supply) can give away information about the structure of the tree or the terminology you're using in it.

Even if your questions don't explicitly give anything away, they may still have a "priming" effect on the participant, by putting them into a particular mindset before they try your tasks.

In most cases, it’s best to ask survey questions after the tasks, so they can’t affect the tree test itself. Most questions won’t skew your results much, but it’s better to be safe.
